Massimo Leone

The Meaning of the Face in the Digital Age

Issue #02 — Transforming Environments

The distinction between genuinely human characteristics and artificial creations is increasingly blurred by the spread of artificial intelligence — especially in urban spaces, where the interaction between humans and AI is at its strongest.

HIAS fellow Massimo Leone researches the semiotic, cultural, and philosophical-ethical dimensions that characterise the uniqueness of human faces in contrast to their artificial imitations. «Facets of the Face» was the title of the interactive exhibition he organised at HIAS as part of this year’s Hamburger Horizonte. Using significant examples, Massimo Leone analysed the semiotics of imitation, the uniqueness of the human being and the connection between AI and deep fakes.

Shortly before the event, he met with Lutz Wendler, cultural journalist at the Hamburger Abendblatt, for an interview. Here is an excerpt:

«The face is something commonplace — and mysterious at the same time.»

Massimo, you have been a Fellow at HIAS in Hamburg since September. To kick things off, you have put together a small interactive exhibition for the Hamburg public entitled «Facets of the Face», which shows what you and your international team have been researching for the past five years. You made it clear how ubiquitous AI-based facial recognition already is in our lives, irritations included…

The face is something commonplace — and mysterious at the same time. In the exhibition, we wanted to de-familiarize people from their own faces, i.e. from something they think they know very well. We wanted to encourage them to think about what constitutes their face and what has been lost in the excessive use of the face through digital technology. At the same time, we didn’t just want to scare them, but also give them hope for the future.

Your research project on the topic of «The meaning of the face in the digital age» is global in scope. What specific insights do you hope to gain from your research in Germany — and Hamburg?

The topic of the face and artificial intelligence is viewed differently depending on society, history and culture. In Germany, there is a particular sensitivity to the collection of data. It is a country that pays great attention to the issue of data protection and privacy. That’s why I want to investigate how people here relate to AI and facial recognition. Hamburg is a metropolis, and I am curious to see how privacy, ethical standards, and traditions can be reconciled with the use of digital technologies in this setting. My research in Hamburg is part of a comparative study that will lead to a larger publication. Our other German point of reference, as a comparatively small city, is Freiburg.

How has the pandemic, which began shortly after you started your research on the face in the digital age, influenced your work?

The pandemic was a moment of crisis for the face. We were forced to cover part of our face and understood how important the faces of others are to us. There was a boom in technology used in digital exchange to replace the face in public and private interactions. That’s why I’m interested in what remains of this technological change. Generally speaking, we have never returned to the way we were before the pandemic. We meet each other face to face less than before.

Are you missing that?

I am neither technophobic nor culturally pessimistic and therefore do not share the sceptical attitude of those who believe that more digital interaction is destroying communities. However, technological change is clearly altering our sense of what a community is and how a community functions. Just one example from academia that might apply to many working people: I used to meet my colleagues weekly, now it’s monthly. We usually only see each other face to face on the screen. What I miss is the direct contact, the context, the place where you meet, have a coffee and chat with someone; that’s very Italian. There is the digital space where you are active, and there are other spaces that you use in your life, but what is missing are the spaces in between, the no man’s land in which we also used to move. And that was important in order to create less static, more fluid relationships between people. When you meet colleagues in the digital space, they are only colleagues: in the exchange via the screen you don’t perceive the person beyond their professional life, and you don’t gain any insights into their personal life.

You conduct research worldwide. What global trends do you see in the use of AI?

The very different approaches to the use of AI in the USA and China are undoubtedly formative. The question that concerns Germany and Europe is whether there is a specific path to AI of our own, whether we can construct a European approach to the development of AI that can be competitive on a global market.

How do you interpret the current competition?

At the moment, it’s a bit Wild West — and Wild East. In the USA, the market is driving the use of AI and big business is leading the development. China, on the other hand, envisions political control; AI is being developed in relation to the political goals of the political establishment. And Europe, as a potential challenger, is basically striving for normative, i.e. legal, control of the new technologies; the law as an expression of a certain ethic should guide the development of AI. This leads to various problems. In the USA, for example, possibilities are being explored regardless of the consequences. If you read interviews with AI tycoons like Sam Altman, they engage in a paradoxical discourse because they constantly warn of the dangers of AI for humanity and at the same time collect money to push research even further. In fact, we need to think AI through carefully and develop it cautiously because it is so powerful. In the EU, the guiding principle is that AI should not be detached from a certain idea of humanity. From a commercial perspective, however, this humanistic understanding is a limit that makes global competition more difficult.

Do you think it is possible for AI to be used in a thoughtful way that can hold its own in international competition? You yourself work together with computer engineers for a private company in Bologna that develops AI applications.

I am more optimistic than pessimistic about the benefits of AI for humanity. Ultimately, what we make of it depends on us. It depends on how we work together. And it should always be clear to us that AI content is never value-free, because it is already a product of the past that cannot simply be repaired. I would like to illustrate this with the example of CGI, which produces computer-generated images that appear to be visual truths but are based on huge image databases whose individual components may be questionable, because they also contain errors and forgeries or have been shaped by prejudices, so something false is added to what is supposed to be true. In other words, there is also evil hidden in our AI applications because humanity has provided the input. To counter this, I hope to establish ethical criteria by design in the technology, i.e. in the algorithm.

How can this work?

In a way, this is impossible if we continue to separate the knowledge of the philosopher from that of the engineer. Instead, we need quasi-renaissance engineers, humanistic technologists who are aware of the risks of what they are doing. And our philosophers and ethics specialists would also need to know more about technology if they want to make competent statements about it.

What could AI ethics look like?

Very simple. Before AI developers send their ideas out into the world, they should try them out in the sandbox, so to speak, and play with them in order to recognize dangers early on. But unfortunately, they usually don’t want to wait because the market moves so fast, so they immediately release their algorithms to the public, backed by venture capital for rapid commercialization.

You also teach and conduct research in Shanghai. How do you experience control there with the help of AI?

Facial recognition is omnipresent there. It’s clear that it’s a massive surveillance operation because all this data could be used by government forces. However, there is another aspect that we in the West often don’t realize. The face in China, unlike in Western societies, is not a strong expression of uniqueness — the social face is more important than the physical face. An interesting example of this was the use of masks during the pandemic. Unlike in Western societies, their use was uncontroversial in Japan, Korea or China. These countries have different political systems, but they have a very similar understanding of the position of the individual in society. So if you wear a mask in China, it’s not because you want to protect yourself from others, but because you want to protect others from yourself. Knowing this is important for understanding how AI is used in China. And it is also important to know that the significance of new technologies in Southeast Asia is different from ours, which also has to do with religious attitudes. A Buddhist, for example, has no problem with new technology; it manifests itself as a religious force in Buddhist understanding.

How important are your own impressions when you explore a foreign city?

They are fundamental. One of my first activities is to walk around and look for faces in the streetscape — how and where there are faces and how they are represented. We take faces in the cityscape for granted, but in cities in North Africa or cities that are deeply influenced by Islamic culture, you will hardly see faces because in these traditions the representation of the human body is not taken lightly. We take for granted that our heroes are represented sculpturally as statues, but this concept does not exist in Islamic civilizations. We, on the other hand, represent everything with a face, because Christianity manifests itself in incarnation, and this has a face.

And what is your attitude to your own face?

I realize how unrecognizable our face is to ourselves. We can only visually perceive our face indirectly: in the mirror, in pictures or in movies. Although the face has been the most important link in social interaction since we were born, we have to accept that this interface remains hidden from us. Although our face is so important, we ourselves do not know it.

Interview by Lutz Wendler

Massimo Leone is Professor of Philosophy of Communication and Cultural and Visual Semiotics at the University of Turin. In the academic year 2024–25, he is a University of Hamburg Fellow at HIAS, where he will continue his research on «The Meaning of the Face in the Digital Age», which he has been conducting for five years within a European ERC research project. The academic global player seems to feel at home in many different places on earth, as well as in the digital world. The 49-year-old Italian also teaches and researches at Shanghai University, is an associate member of Cambridge Digital Humanities at Cambridge University, Director of the Institute for Religious Studies of the «Bruno Kessler Foundation» in Trento, and a lecturer at the Catholic University of Caracas in Venezuela.

Image Information

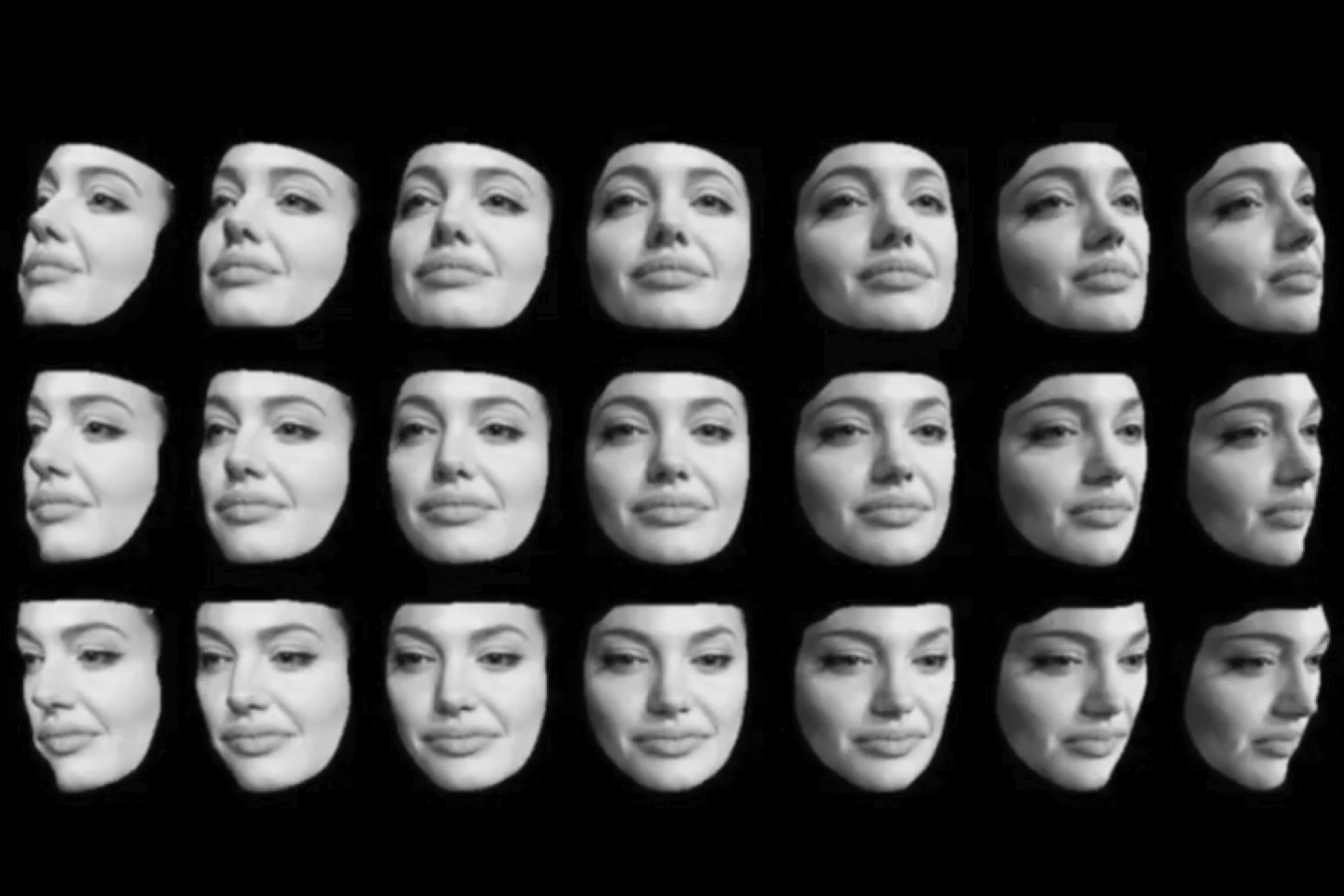

1 (cover). Digital reconstruction and multiplication of Angelina Jolie’s face; in Marios Savvides. 2018. “Advanced Facial Recognition Technology”, YouTube Channel of the World Economic Forum; available at https://youtu.be/U15FWjOWqgQ?si=23y8XRhPC_bfHyPB (minute 3:51) (last access 6 November 2024)

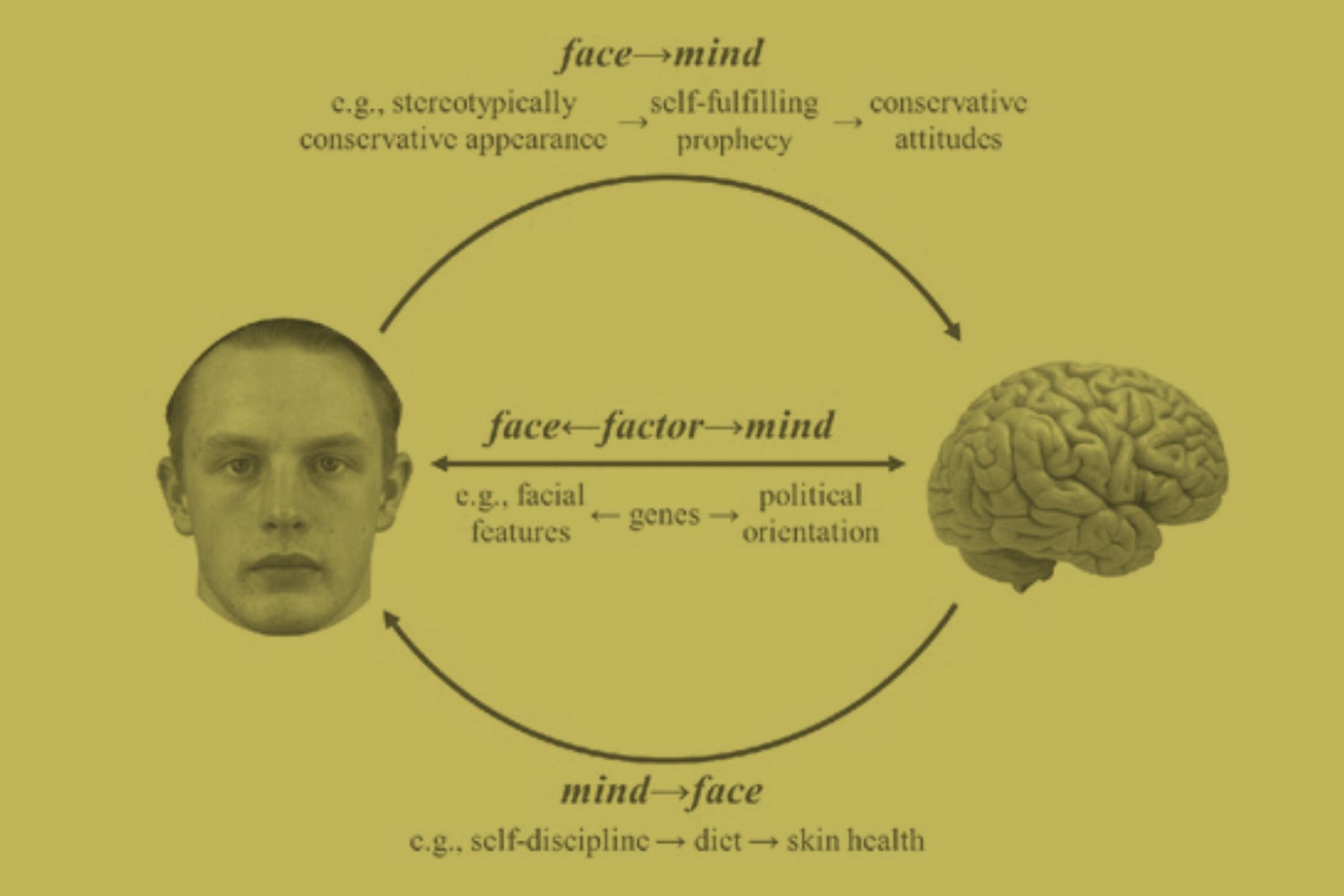

2. Diagram of process for detecting sexual orientation through automatic face recognition; in Wang, Yilun and Kosinski, Michal. 2018. “Deep Neural Networks Are More Accurate than Humans at Detecting Sexual Orientation from Facial Images”, 246-57. Journal of Personality and Social Psychology, 114, 2; DOI: https://doi.org/10.1037/pspa0000098

3. Same as 1.

4. Map of surveillance cameras in Dammtor, Hamburg; retrieved from “Surveillance under Surveillance”; https://sunders.uber.space/ (last access 6 November 2024)

5. Anti-facial recognition mask by Leonardo Selvaggio; retrieved from http://leoselvaggio.com/ (last access 6 November 2024)

6. Fight for the Future activists provocatively use automatic face recognition in Washington, DC; retrieved from https://fightfortheftr.medium.com/ (last access 6 November 2024)

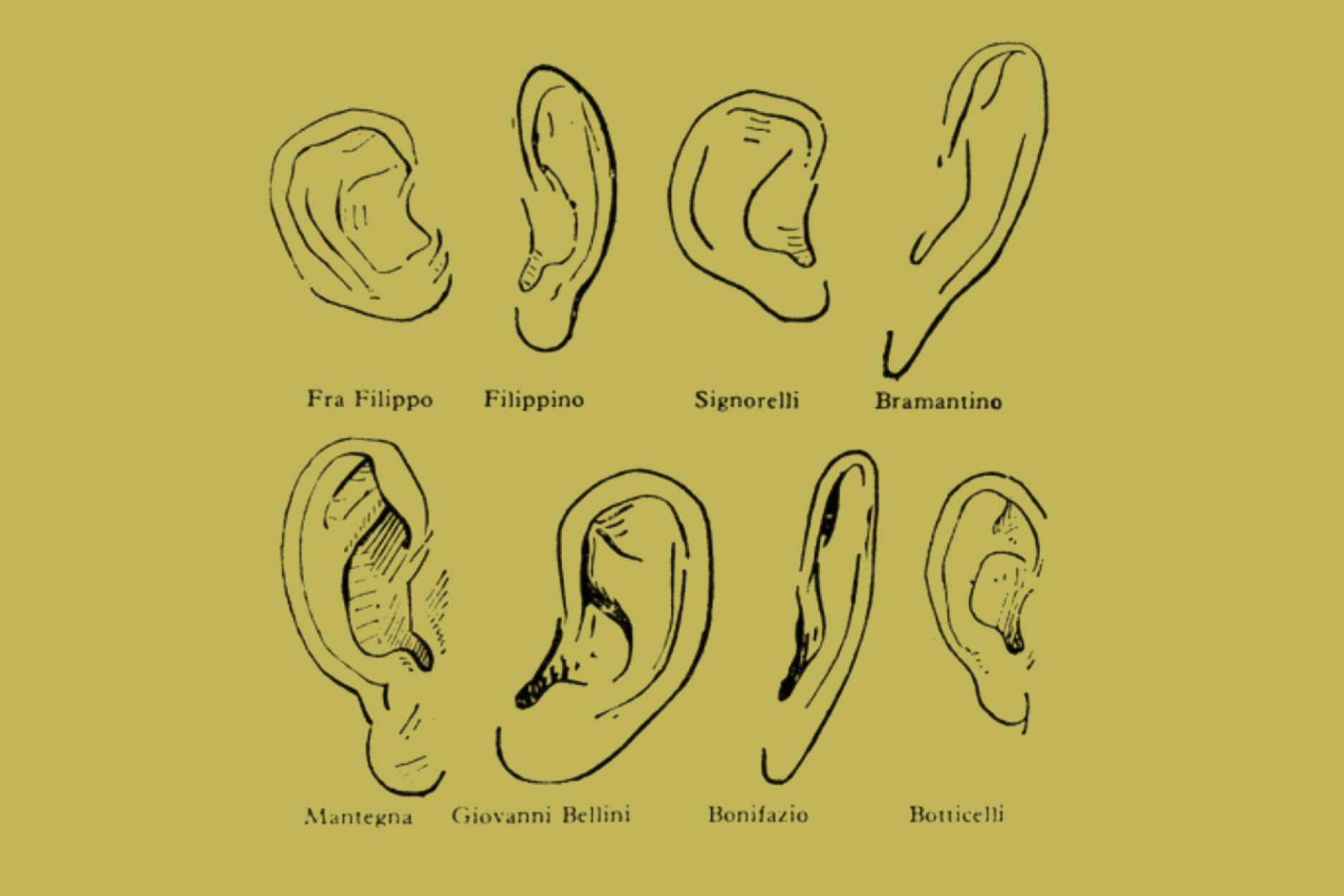

7. Giovanni Morelli. 1892. Italian Painters: Critical Studies of their Works. London: John Murray; pp. 77-8

8. Filter giving a “Jewish nose” by Maayan Sophia Weisstub. “Jewish Filter –‘Do I Look Like a Jew?’”; retrieved from https://weisstub.com/works/other/ (last access 6 November 2024)